SUPER Cheap AI PC – Low Wattage, Budget Friendly, Local AI Server with Vision

Exploring the cheap end of AI, we test the Quadro M2000, K2200, and P2000 against CPU inference to see what local AI performance looks like in the mid-$100 price range. Going in, I thought the K2200 would be the card to get, but there's a twist, so make sure you watch!

SUPER BUDGET AI RIG

Dell OptiPlex 7050 (4.5 t/s)

Quadro M2000 4GB (11 t/s, better option)

Quadro K2200 4GB (7 t/s, look for M2000)

Quadro P2000 5GB (20 t/s, or follow the 350 build)
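To put the generation speeds above side by side, here is a minimal sketch that divides each card's tokens per second by the OptiPlex figure. It assumes the 4.5 t/s OptiPlex number is the CPU-only baseline the cards were tested against; the dictionary and function names are illustrative, not from the video.

```python
# Generation speeds (tokens/second) as listed in the parts list.
# Assumption: the OptiPlex 7050 figure is the CPU-only inference baseline.
CPU_BASELINE_TPS = 4.5

GPU_TPS = {
    "Quadro K2200 4GB": 7.0,
    "Quadro M2000 4GB": 11.0,
    "Quadro P2000 5GB": 20.0,
}


def speedup_over_cpu(gpu_tps: float, cpu_tps: float = CPU_BASELINE_TPS) -> float:
    """Return how many times faster a card generates tokens than the CPU baseline."""
    return gpu_tps / cpu_tps


if __name__ == "__main__":
    # Print the cards from slowest to fastest with their speedup factor.
    for card, tps in sorted(GPU_TPS.items(), key=lambda item: item[1]):
        print(f"{card}: {tps:.1f} t/s, {speedup_over_cpu(tps):.2f}x CPU")
```

On these figures the K2200 lands at roughly 1.56x the CPU baseline, the M2000 at about 2.44x, and the P2000 at about 4.44x.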

RIGS, GUIDES, SOFTWARE

350 AI server build

QUAD 3090 Rig

AI Hardware Tips and Tricks

AI Writeup

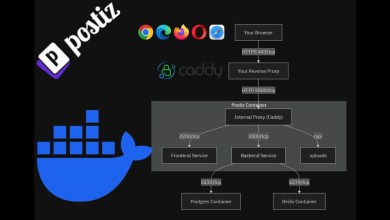

Proxmox LXC Docker Ollama Mega Guide
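The t/s figures in the parts list are token-generation rates. The description does not say which tool produced them. Assuming an Ollama server, a comparable number can be computed from a non-streaming `/api/generate` response, where `eval_count` is the number of generated tokens and `eval_duration` is the generation time in nanoseconds. This is a sketch: the default port 11434 and the `minicpm-v` model tag are assumptions, so substitute your own host and whichever model you pulled.

```python
import json
import urllib.request

# Assumption: a local Ollama server listening on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def tokens_per_second(response: dict) -> float:
    """Generation speed from an Ollama /api/generate response.

    eval_count is the number of generated tokens; eval_duration is in nanoseconds.
    """
    return response["eval_count"] * 1e9 / response["eval_duration"]


def benchmark(model: str, prompt: str, url: str = OLLAMA_URL) -> float:
    """Send one non-streaming request and return the measured tokens/second."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as reply:
        return tokens_per_second(json.load(reply))


if __name__ == "__main__":
    # "minicpm-v" is an assumed model tag (MiniCPM vision model); change as needed.
    print(f"{benchmark('minicpm-v', 'Why is the sky blue?'):.1f} t/s")
```

A single request is noisy, so averaging several runs with the same prompt gives a fairer comparison between cards.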

Chapters

0:00

0:27 Ai PC Parts

3:24 How To Start With AI Learning

4:33 K2200 on MiniCPM Vision Model

17:15 M2000 AI Testing

18:44 P2000 Testing

21:00 Conclusions

Be sure to 👍✅Subscribe✅👍 for more content like this!


Please share this video to help spread the word and drop a comment below with your thoughts or questions. Thanks for watching!

*****

As an Amazon Associate I earn from qualifying purchases.

When you click on links to various merchants on this site and make a purchase, this can result in this site earning a commission. Affiliate programs and affiliations include, but are not limited to, the eBay Partner Network.

*****


Could you share that cat meme? 😅

My dual P40 + A2000 setup uses 550W at idle, lol. Keeps me warm.

Looking at price: 2x P2000 will cost you around $200 (+shipping), while a new RTX 3060 12GB is $284 on Amazon (+shipping). For around $84 more, why would someone buy the two P2000 cards? I'm pretty sure the RTX 3060 would smack the dual P2000s.
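The trade-off in that comment can be put into numbers. This minimal sketch uses only the prices quoted there (shipping excluded) and the VRAM sizes from the listing (5GB per P2000, 12GB on the RTX 3060). It compares cost per gigabyte of VRAM only; it ignores that the P2000 memory is split across two cards and says nothing about speed. The constant and function names are illustrative.

```python
# Prices quoted in the comment (USD, shipping excluded).
DUAL_P2000_PRICE = 200.0
RTX_3060_PRICE = 284.0

# VRAM per option: two 5GB P2000s versus one 12GB RTX 3060.
DUAL_P2000_VRAM_GB = 2 * 5
RTX_3060_VRAM_GB = 12


def dollars_per_gb(price: float, vram_gb: float) -> float:
    """Cost of each gigabyte of VRAM for a given card or card pair."""
    return price / vram_gb


if __name__ == "__main__":
    print(f"Price difference: ${RTX_3060_PRICE - DUAL_P2000_PRICE:.0f}")
    print(f"2x P2000: ${dollars_per_gb(DUAL_P2000_PRICE, DUAL_P2000_VRAM_GB):.2f}/GB")
    print(f"RTX 3060: ${dollars_per_gb(RTX_3060_PRICE, RTX_3060_VRAM_GB):.2f}/GB")
```

By this measure the pair works out to $20.00/GB against about $23.67/GB for the 3060, while the 3060 offers 2GB more VRAM on a single card.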

19:53 – so would you recommend 3060s over 1080 Tis, or what kind of price would make 11GB Pascals an interesting value?

I have two Titan Xps languishing. They may have a new purpose now.