
Run Mistral, Llama2 and Others Privately At Home with Ollama AI – EASY!

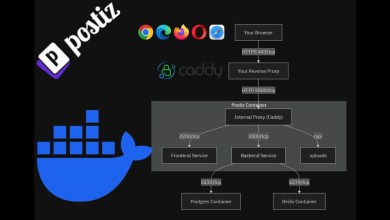

Self-hosting Ollama at home lets you use advanced AI tools whilst keeping your data private. In this video I give a quick tutorial on setting it up two ways: from the CLI, and with Docker plus a web GUI.
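As a rough illustration of what "private" means here: once Ollama is running, every prompt goes to a process on your own machine rather than a cloud service. The sketch below assumes Ollama's default port (11434), its `/api/generate` REST endpoint, and a model named `mistral` that has already been pulled. The helper function names are hypothetical and are not part of Ollama itself.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default port, 11434, on this machine.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(prompt: str, model: str = "mistral",
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint (hypothetical helper)."""
    payload = {
        "model": model,    # assumed to be pulled already, e.g. with `ollama pull mistral`
        "prompt": prompt,
        "stream": False,   # ask for a single JSON object instead of a token stream
    }
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(body: bytes) -> str:
    """Extract the generated text from a non-streaming /api/generate reply."""
    return json.loads(body)["response"]


def ask(prompt: str, model: str = "mistral") -> str:
    """Send a prompt to the local Ollama server and return its answer."""
    request = build_generate_request(prompt, model)
    # Generation on CPU-only hardware can be slow, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=300) as reply:
        return parse_generate_response(reply.read())


# Usage (needs a running Ollama server):
# print(ask("Why is the sky blue? Answer in one sentence."))
```

Setting `"stream": False` keeps the example short; without it the server sends back a series of partial JSON objects that you would need to read line by line.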

Ollama:

Video Instructions:

Recommended Hardware:

Discord:

Twitter:

Reddit:

GitHub:

00:00 – Overview of Ollama and LLMs

01:38 – Creating a VM

02:52 – Installation – CLI

05:50 – Installation – Docker

11:55 – Outro
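Whichever install route you follow, a quick way to confirm the server is up is to ask it which models it has. This is a minimal sketch, assuming the default port 11434 and Ollama's `/api/tags` endpoint; for the Docker route it also assumes the container publishes that port to the host. The function names are hypothetical helpers, not part of Ollama.

```python
import json
import urllib.request

# Assumption: default port 11434. For a Docker install this only works if the
# container publishes that port to the host (e.g. `-p 11434:11434`).
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(body: bytes) -> list[str]:
    """Return the model names from an /api/tags JSON reply (empty list if none)."""
    return [model["name"] for model in json.loads(body).get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local Ollama server which models have been pulled."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as reply:
        return parse_model_names(reply.read())


# Usage (needs a running Ollama server):
# print(list_local_models())
```

If the call fails with a connection error, the server is not reachable on that port; on the CLI route, `ollama list` shows the same model list directly.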

