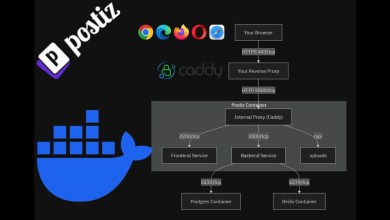

Proxmox Ai Homelab all-in-one Home Server – Docker Dockge LXC with GPU Passthrough

One server for the ultimate homelab: a multi-3090 GPU LXC and Docker host that has turned into a really cool rig. Here I show the complete step-by-step setup, from Proxmox to the NVIDIA drivers to the NVIDIA Container Toolkit, and then we set up an LXC to house a Docker install that handles GPU passthrough at bare-metal speeds. Then I install Ollama and Open WebUI and test the speed of Llama 3.1 against bare metal. This tutorial gets you completely set up!
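
For anyone who wants to repeat the Llama 3.1 speed comparison on their own hardware, here is a minimal sketch of one way to measure it. It is not the method used in the video. It assumes Ollama is reachable on its default port (`localhost:11434`) from wherever the script runs and that a model tagged `llama3.1` has already been pulled; the prompt and the function names are placeholders made up for illustration. It relies on the `eval_count` and `eval_duration` fields that Ollama's `/api/generate` endpoint returns, with the duration reported in nanoseconds.

```python
import json
import urllib.request

# Assumption: Ollama's default address; change this if your LXC exposes it elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def tokens_per_second(response: dict) -> float:
    """Generation speed computed from Ollama's eval_count and eval_duration (nanoseconds)."""
    duration_s = response["eval_duration"] / 1e9
    if duration_s <= 0:
        raise ValueError("eval_duration must be positive")
    return response["eval_count"] / duration_s


def benchmark(model: str = "llama3.1", prompt: str = "Why is the sky blue?") -> float:
    """Send one non-streaming request and return the generation speed in tokens/s."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as reply:
        return tokens_per_second(json.load(reply))


if __name__ == "__main__":
    print(f"{benchmark():.1f} tokens/s")
```

Running the same script once against the Docker-in-LXC install and once against a bare-metal install, with the same model and prompt, gives two numbers that can be compared directly.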

Ai Server Build

GPU Rack Frame

Gigabyte MZ32-AR0 Motherboard

RTX 3090 24GB GPU (x4)

512GB DDR4 2400 RAM KIT

PCIe4 Risers (x4)

EPYC 7702

Corsair H170i ELITE CAPELLIX

SP3/TR4/5 Retention Kit

ARCTIC MX-4 Thermal Paste

CORSAIR HX1500i PSU

4i SFF-8654 to 4i SFF-8654 (x4)

HDD Rack Screws for Fans

Chapters

0:00 All in One Homelab Ai Server

1:34 Install Proxmox

4:28 Configure Host

5:49 Proxmox GPU Passthru options

8:42 NVIDIA Drivers for HOST

11:27 Docker LXC Install

13:26 Proxmox LXC GPU Docker Setup

20:58 Install Dockge and OpenwebUI

25:20 Setup Ollama and test llama 3.1

34:13 Wrapping Up

Written Article with all steps – https://digitalspaceport.com/proxmox-multi-gpu-passthru-for-lxc-and-docker-ai-homelab-server/

How does scheduling the GPUs work across Docker containers? Can you run multiple containers sharing GPUs, or pinned to specific GPUs, or will VRAM and compute allocation always start from the first GPU, as shown at around 31:51?

Bonus tip: name your storage with earlier letters of the alphabet so installations automatically pick the desired drive by alphabetical order (e.g. b-ssd or installprox, which both sort before local and local-zfs).

Love the video! Thanks for all the tips and tricks!

I love the ONE black fan aesthetic you keep rockin.

I've been battling with Proxmox the whole week! Looks like I'm able to make everything work except for a Capture Card from BM… The guest machines can see the card, but none of the applications can use it without crashing, on either Linux or Windows… Neither DaVinci Resolve nor OBS works.. 😢 (Help).

Put those crypto racks to use!

I wonder if you’ll be able to run parallel GPU tasks with this setup or only one request at a time. Or a gpu queue of some kind.

Yep, this is kinda what I'm doing, but I can only afford one 3090 video card. I'll need to do some config comparisons with your build, but I do notice my single-3090 Proxmox/Llama 3.1 build sits at 160 watts when doing pretty much nothing at all. Keeps my garage nice and toasty.

I did this with my 5950X and it was working well, but I have upgraded to an EPYC 4004 and ECC, building it this weekend!! 🙂

With that many GPUs, run tabbyAPI or vLLM/Aphrodite Engine; it will be way faster.

9:00 I recommend PowerShell 7, it is more fun to use 🙂

Good stuff. Thanks a bunch.

How is the voice mode on Open WebUI? Is it identical to OpenAI's?

I'm saving up to replicate this build for a voice chatbot that I can talk to constantly via earpiece, for a Disco Elysium voice bot.

Was initially building a dual 3090 pc for local LLM but after your vids decided to buy 2 more and go with your setup (and just wait for 5090 release for my general PC). Awesome stuff.

GREAT VIDEO!!!! Thank you, this video will make my job a lot easier setting up my GPU server. This is how I was planning on setting it up. I've used Proxmox before and loved it for non-AI servers.

Just run the llama 405b model! Do it for the likes!

Are your Chia disk shelves offline?

Awesome guide! I was just starting to mess with LXCs, thanks!

Very nice video! This is becoming one of my favorite channels to watch on YouTube for tech and Linux stuff. Always interesting topics and very detailed explanations. It goes a long way for me when someone breaks everything down step by step and explains why. Thank you.

You could easily trade crypto with this setup :-). Cool and interesting video.

Nice video! Gonna save it so I can try it too. I'm learning about machine learning and can't wait to be at this level.

🎉