My New Lab Server: Dell R740XD

Tech Supply Direct Offer Discount

StorageReview Dell Article

Chapters

00:00 New Lab Server

02:30 Dell R740XD options

03:00 R740XD RAID and NVMe Drives

03:38 Dell iDRAC9 Management

05:50 Hardware Specs

06:50 Wattage and Power Capacity

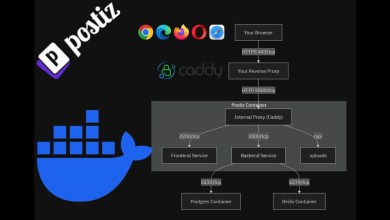

07:40 My XCP-ng Lab Software and Networking

I've got one R740 running in my homelab. It's the non-XD version. I even picked the version without drive bays, because I wouldn't use them anyway. My storage is two Intel 4TB NVMe drives mounted internally.

The best part of the machine is that it idles at 56 W.

Specs:

1x Xeon 6132 (14c/28t @ 2.6 GHz)

192GB DDR4-2400

2x Intel P4510 4TB on an AliExpress U.2 to PCIe PCB

Dell rNDC SFP+ NIC

1x 750w PSU

Running the newest Proxmox. But I only use it for testing, so it's off when I don't need it.

Unless it's a decommissioned server for lab use, it's hard to recommend Dell hardware with all the proprietary limitations in the hardware/BIOS whitelisting. Just get a Supermicro server and avoid the headache altogether.

Nice!

I bought an R730XD (12x 3.5") from Tech Supply Direct back in 2020 for my home NAS and it's been rock solid, well worth it.

I kinda wish we, as a community, had a good pool of info on reusing these chassis.

I'd love to know how far back I can go if I want NVMe connectivity, and I'm willing to strip the chassis down to the backplane/cages.

I recently got my hands on a bunch of 4/8TB U.2 drives for very little money, and now I'm getting sticker-shock at just how much money it's going to cost to even connect them.

An $80 cage plus a $25 cable is hard to justify just to attach a $50 drive…

I can confirm Proxmox compatibility. I run eight of them in an SMB cluster.

Yeah… a very small server at home… 😊 I want to buy twelve units… thank you for your information, Billy Gates Junior!

Gotta disagree with the Intel vs Broadcom argument. In my experience, Intel NICs rely on offloads to get the performance they do, and my annoyance is when those "smarts" aren't very smart and get in the way. A simple recent example is an Intel NIC and VLANs: I have an 82599ES that I cannot get to pass VLANs (trunk) under Proxmox (currently 8.2.7). A BCM5709 (onboard in the same system) is working perfectly fine, as is a BCM57840 (in a different Proxmox node).

I don't need my hardware trying to be smart and instead being dumb and wasting my time. For high pps, Intel is supposed to be faster (I can't name the system, but the software maker insisted on Intel), but in every case where we have it, those offloads are pointless because they are all plugged into access ports and the network devices deal with anything fancy (VLANs, VXLAN, etc.). Maybe with VMware the drivers handle things properly, but the Linux drivers are crap (I made the mistake of using VLANs on a Windows machine once for a Hyper-V setup and am never touching that again).

The platinum YouTube plaque on the wall behind you isn't level, Tom. My OCD is taking over.

LOVE your videos, thank you for all the hard work you do for us for free.

I have purchased a good number of R740XDs from Tech Supply Direct. I do a little extra analysis when deciding which CPUs to use: I create a quick spreadsheet of the CPUs and their prices, then Google each one and click the CPU benchmark link to see its multithread and single-thread ratings. Once that's complete, I do some sorting to find the best performance for the price. It's a bit of an eye-opener, as you can usually see a substantial price difference between some models with very little difference in actual performance.
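For anyone who would rather script that spreadsheet step, here is a minimal Python sketch of the same idea: divide a benchmark score by the price and sort. The `Cpu` class, the `rank_by_value` helper, and every model name, price, and score below are made-up placeholders, not real listings or benchmark results, so swap in the numbers you actually look up.

```python
from dataclasses import dataclass


@dataclass
class Cpu:
    model: str
    price_usd: float      # price you were quoted (placeholder values below)
    multi_score: float    # multithread benchmark rating
    single_score: float   # single-thread benchmark rating


def rank_by_value(cpus, score=lambda cpu: cpu.multi_score):
    """Return CPUs sorted by benchmark points per dollar, best value first."""
    return sorted(cpus, key=lambda cpu: score(cpu) / cpu.price_usd, reverse=True)


# Placeholder rows only: replace with the prices and ratings you look up.
candidates = [
    Cpu("cpu-a", price_usd=100.0, multi_score=20000, single_score=2000),
    Cpu("cpu-b", price_usd=250.0, multi_score=25000, single_score=2100),
    Cpu("cpu-c", price_usd=60.0, multi_score=15000, single_score=1900),
]

ranked = rank_by_value(candidates)
for cpu in ranked:
    points_per_dollar = cpu.multi_score / cpu.price_usd
    print(f"{cpu.model}: {points_per_dollar:.1f} multithread points per dollar")
```

Passing `score=lambda cpu: cpu.single_score` ranks by single-thread value instead, which matters more if your workload doesn't scale across cores.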

Tom, I'm curious, what would stop you from using this as a primary system?

Lol… Cascade Lake? In 2024? So basically a 10+ year old platform. Slow DDR4, only 28 lanes of PCIe Gen3, and up to 28 cores per CPU.

Whyyyyyyyyyyyyyyyyyy?

Is there any way these can be less power hungry, like 100 W?

I run an R340 in my homelab. Same generation but quite a bit lower in power usage. Only downside is they top out at 64GB of RAM but for homelab use they're excellent.

Dell has been a reliable server platform for years. I've built a lot of those units, and older ones, for some clients. My personal lab is now built on Cisco HyperFlex C240xM5 servers, which BTW are terrific. I got 3 at auction last year, fully loaded. They are rocking Proxmox right now.

I hope to have the time to test XCP-ng.

I had one of these at a company. We got a $40k server for $16k as it was stock that Dell needed to get rid of. It was an excellent server.

I run a shitload of refurbished R740s for only a third of the price of new R750s without any performance hit. I love building clusters with 20 or 24 R740s instead of 16 R750s: better performance for my customers and less investment. I can also get them in a few days instead of weeks or months.
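To put rough numbers on that trade-off, here is a small Python sketch of the arithmetic. It takes the "third of the price" figure from the comment at face value and normalizes one new R750 to a price of 1. It ignores power, rack space, licensing, and support contracts, all of which would shift the result, and the `cluster_cost` helper is just an illustrative name.

```python
from fractions import Fraction

# Normalized prices: one new R750 = 1 unit. The 1/3 ratio is taken from the
# comment above and is an assumption, not a quoted market price.
R750_UNIT_PRICE = Fraction(1)
R740_UNIT_PRICE = Fraction(1, 3)


def cluster_cost(nodes, unit_price):
    """Hardware cost of a cluster, in units of one new R750."""
    return nodes * unit_price


baseline = cluster_cost(16, R750_UNIT_PRICE)

for nodes in (20, 24):
    cost = cluster_cost(nodes, R740_UNIT_PRICE)
    share = cost / baseline
    print(
        f"{nodes} x R740 costs {float(cost):.2f} R750-equivalents, "
        f"{float(share):.0%} of the 16 x R750 cluster"
    )
```

Under those assumptions, even the 24-node R740 cluster comes in at half the hardware cost of 16 new R750s.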

I chose to go the second-hand HP route. I've had about a dozen over the past 10 years, spanning Gen6 to Gen10. The good news is that my therapist says we are close to uncovering the early traumatic event that sent me down the road to self-flagellation.

Funny timing, I have about 10 of these I'd like to sell! Send people my way! Unless you'd like some options for your clients.

"Really fast NVMe", like how fast? It would be great to see what you achieved with ZFS. Fast like one NVMe drive (vlog raidz1)?

Got the R740xd a couple of months ago. I'm running unRAID on it and installed an Arc A770 with some mods to make it fit.

What's wrong with Broadcom? I have the Broadcom Adv. Dual 10GBASE-T Ethernet and the Broadcom Gigabit Ethernet BCM5720, and they work ootb for my Proxmox setup. In fact, I've never had any problems with any Broadcom NIC, at least with Proxmox as my base.

OTOH, I have R440s and an R740 (8x 2.5" SAS drive bays) as well. All are great and run the same 6138 CPUs. And yeah, they suck power: around 189 watts for each R440 (single CPU) and around 280 watts for the R740 (dual CPU).

Hence, I'm planning to solar power my homelab.

Intel CPUs? What would Wendell say about that? Unless you pay per core like in VMware, I don't see any reason to choose Intel these days.

Gen 3 PCI-E 3.0 Intel based CPU

Personally, I feel like the glory days of rackmount servers for the homelab are behind us. Power consumption is already way too high and only getting higher, and the systems are becoming complete overkill, with a single host capable of what 3-4 older boxes used to do. On top of that, newer desktop CPUs are crazy fast and efficient, and high-capacity DIMMs let you pack a ton of RAM into a tiny system. I had a 24U rack with a few R*20 systems, a disk shelf, multiple switches, a tape library, etc. I sold it all and replaced it with an admittedly expensive but tiny SFF Ryzen system in a NAS case that idles at ~80 W with half a dozen disks, and I have not looked back at all. Although I did keep the tape library, that thing's dope af.

Now I want one. My R710, when I still used it, sometimes used 300 W.

"Avoid Broadcom" is the best advice on so many levels… We have deployed a lot of R740xds lately for internal use and for clients. It can be amazing for TrueNAS if you load in some SSDs, NVMe flash, and 16 big 3.5" drives with the mid mount.

I got lucky and picked up a free T640 with 8x 1.94TB Intel SSDs. I picked up a BOSS card for boot and then used the rest of the PCI slots for NVMe drives. I have 2 x16s left for future expansion in the front with U.2s. It had two 4110s, but I swapped them for two 5120s for core density. With all-SSD storage it runs in the low 200 watts for the homelab. Fun chassis.

XD

Two questions: how much did you pay for the server, and which one is better, XCP-ng or PVE?

Tom, if you were spec'ing out a new XCP-ng system, would you prefer an all-in-one like the unit you have here (host + storage), or a host (compute server) with separate storage, like an iXsystems box with NFS shares?

Why do you use XCP-ng over PVE?

Strongly recommend you look at the R7515s. AMD EPYC, one CPU.

Looks like iDRAC 9 is a lot snappier than 7 and 8. Good to see.

Looks like iDrac 9 is a lot snappier than 7 and 8. Good to see.

Nice!

Dude, you got a Dell.

Still rocking an R720. It's been in service for around 8 years now and runs at around 110-140 W at low load. Currently it just runs my pfSense VM. There's not much else I can use it for without it being too slow to be reasonable. Most AI programs won't run due to missing instruction sets, and there's no hardware video decoding, so I can't make it a decent Plex box without investing in a GPU (I already have an Intel CPU server that I use for hardware decoding anyway). It's also way too slow for most other tasks that would benefit from lots of cores at a low core clock, such as 3D modeling of large fluid simulations (which also needs a GPU).

Love these servers! Have two of them in my home lab running vSphere! Great video!

I wish I had gotten an R740xd instead of my T7820 for my main home server… It's the same hardware (minus the NIC daughter board and iDRAC), but I didn't plan on wiring my condo or getting a rack at the time, so I went with the tower for noise reasons.

Now, technically speaking, there is a rack conversion kit for it… but I've been trying to find one for nearly a year. I do love the R730xd with the 12 3.5" bays that I'm using for TrueNAS, though.

Who makes your glasses?

I wish that network module was available as a PCIe card!

It would definitely be a great backup machine that you could have on for a few hours a day or a few days a month.