REFLECTION Llama 3.1 70B Tested on Ollama Home Ai Server – Best Ai LLM?

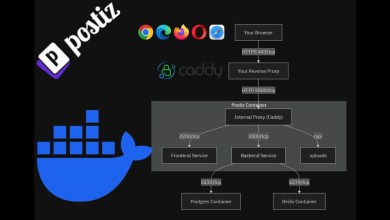

The buzz about the new Reflection Llama 3.1 fine-tune being the world's best LLM is everywhere, and today I'm testing it out with you on the Home Ai Server we put together recently. It runs in Docker in an LXC container on Proxmox, on my EPYC quad-3090 GPU Ai rig. Ollama shipped support for this model quickly, and thankfully they did! There are some surprising and unexpected results in the mix. You can run the Q8 version on four 24GB GPUs and the Q4 version on two 24GB GPUs (or any combination of GPUs that adds up to roughly 40GB of VRAM).
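The GPU counts above follow from a rough size estimate. The sketch below is a back-of-the-envelope Python calculation, not anything Ollama itself runs. It assumes about 70 billion parameters and the llama.cpp Q8_0/Q4_0 block formats (roughly 8.5 and 4.5 bits per weight, including the per-block scale). It counts weights only: the KV cache, CUDA context, and activations add more on top, so treat the results as a lower bound.

```python
# Rough weights-only VRAM estimate for a quantized ~70B model.
# Assumptions (not from the video): ~70e9 parameters, and llama.cpp-style
# block quantization: Q8_0 ~ 8.5 bits/weight, Q4_0 ~ 4.5 bits/weight.
# KV cache, CUDA context, and activations are NOT included.

PARAMS = 70e9  # assumed round parameter count for Llama 3.1 70B

BITS_PER_WEIGHT = {
    "q8_0": 8.5,  # 32 int8 weights + one fp16 scale per block = 34 bytes
    "q4_0": 4.5,  # 32 4-bit weights + one fp16 scale per block = 18 bytes
}


def weights_gb(quant: str, params: float = PARAMS) -> float:
    """Approximate size of the quantized weights in decimal gigabytes."""
    return params * BITS_PER_WEIGHT[quant] / 8 / 1e9


def fits(quant: str, num_gpus: int, gb_per_gpu: float = 24.0) -> bool:
    """True if the weights alone fit in the combined VRAM of the GPUs."""
    return weights_gb(quant) <= num_gpus * gb_per_gpu


if __name__ == "__main__":
    for quant, gpus in [("q8_0", 4), ("q4_0", 2)]:
        print(f"{quant}: ~{weights_gb(quant):.1f} GB of weights, "
              f"fits on {gpus}x24GB: {fits(quant, gpus)}")
```

Under these assumptions the script reports about 74.4 GB for Q8_0 and 39.4 GB for Q4_0, which lines up with four and two 24GB cards respectively once some headroom for context is left.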

Ai Server Build Guide –

Proxmox Docker LXC GPU Ai Server Setup –

Bare Metal Ai Server Setup Guide –

GROK vs LLAMA 3.1 70 Stock –

Reflection –

Ollama Reflection Model –

The Tweet –

Ai Server Build

GPU Rack Frame

Gigabyte MZ32-AR0 Motherboard

RTX 3090 24GB GPU (x4)

256GB DDR4 2400 RAM

PCIe4 Risers (x4)

EPYC 7702

Corsair H170i ELITE CAPELLIX

SP3/TR4/5 Retention Kit

ARCTIC MX4 Thermal Paste

CORSAIR HX1500i PSU

4i SFF-8654 to 4i SFF-8654 (x4)

HDD Rack Screws for Fans

Be sure to 👍✅Subscribe✅👍 for more content like this!

Please share this video to help spread the word and drop a comment below with your thoughts or questions. Thanks for watching!

Digital Spaceport Website

🛒Shop (Channel members get a 3% or 5% discount)

Check out for great deals on hardware and merch.

*****

As an Amazon Associate I earn from qualifying purchases.

When you click on links to various merchants on this site and make a purchase, this can result in this site earning a commission. Affiliate programs and affiliations include, but are not limited to, the eBay Partner Network.

Other Merchant Affiliate Partners for this site include, but are not limited to, Newegg and Best Buy. I earn a commission if you click on links and make a purchase from the merchant.

*****

Yes, run the FP16 please. Provide hardware specs.

Don’t you need to open a new chat window for each test? Otherwise it will be working with a long context window.

Thanks for the detailed video so quickly after this release! FYI, I saw an interview with the creators of Reflection Llama on the Matthew Berman YouTube channel and they mentioned that it doesn’t always use reflection… only with questions that are hard enough to warrant it. Also, they mentioned that they hadn’t tested how well the quantized versions would perform with <think> and <reflect>. They did zero testing with the quantized version, so you are exploring uncharted territory 👍

Waiting on my second 3090 just for this

Also, I'm a bit concerned that you ran all the questions in a single conversation. I've heard of models degrading as a conversation gets longer.

Are you ex navy?

Extra Threadripper. Wish I had that problem. haha

Did you use the system prompt for reflection?

Wow! The quality of your videos, the visual effects, and the presentation are incredibly beautiful and very interesting. Thank you, and have a good weekend. Greetings from Brazil, BR.